ABOUT US

Founded in Singapore in 2014, Vantage Point Security quickly established an enviable reputation for technical excellence in providing comprehensive security testing services that protect business-critical digital assets and confidential information. Our proven expertise in cloud and mobile security testing makes us the partner of choice for a growing number of blue-chip organizations that place a high value on making security a key pillar of their strategic digital transformation initiatives.

CREST ACCREDITED

Our People

Security Compliance

What We Do

Penetration Testing

Identify security vulnerabilities and weaknesses in applications, networks and cloud infrastructure by testing your business-critical digital systems the same way threat actors would attack them.

Managed Services

Scale your security testing program with unlimited on-demand security testing that optimizes your security budget, maximizes operational efficiency and delivers outstanding security outcomes.

Red Team Exercises

Red Team exercises simulate the attack scenarios that could occur if your organization were targeted by an Advanced Persistent Threat (APT), such as a nation-state actor or a sophisticated criminal organization.

Phishing Simulation

Phishing campaigns designed to evaluate staff awareness of the dangers posed by potentially malicious email messages and attachments.

Mobile Security Testing

Mobile application security testing aligned to the OWASP Mobile Security Testing Guide (MSTG) with coverage of features unique to the iOS and Android ecosystems.

Security Training

Train your developers to build security into your applications as they develop them. Building secure applications from the beginning reduces the cost and delay of remediating security problems at the end of development.

Smart Contracts and Cryptocurrencies

Detailed smart contract auditing for Ethereum, Algorand and other blockchains, including proprietary ones. We can provide end-to-end security for your crypto project, covering both on-chain and off-chain assets as well as oracles.

Security Solutions

Vantage Point partners with the world’s leading security technology companies to deliver best-in-breed security solutions.

Who We Work With

Banking and Finance

Utility Providers

Telecommunications

E-Commerce

Healthcare Providers

FinTech Solutions

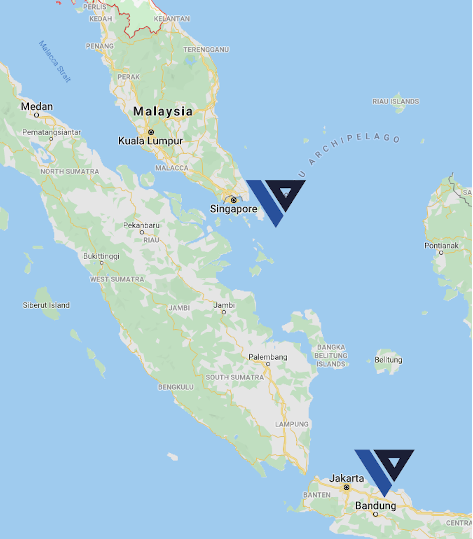

Our Office Locations

Singapore

Vantage Point Security Pte Ltd

12 Tai Seng Link, #04-01A

Singapore 534233

+65 6282 8024

sales@vantagepoint.sg

Indonesia

PT Vantage Point Security Indonesia

GD World Trade Center 5

LT. 7 JL JEND Sudirman Kav. 29 RT 008 RW 003 Jakarta Selatan DKI.

Jakarta, Indonesia 12920

+62 215211235

sales@vantagepoint.co.id